DANIEL C. DENNETT

BREAKING THE SPELL

RELIGION AS A NATURAL PHENOMENON

2006

FOR SUSAN

Contents

2. A working definition of religion

5. Religion as a natural phenomenon

2 Some Questions About Science

1. Can science study religion?

2. Should science study religion?

3. Might music be bad for you?

4. Would neglect be more benign?

3. Asking what pays for religion

4. A Martian's list of theories

PART II. THE EVOLUTION OF RELIGION

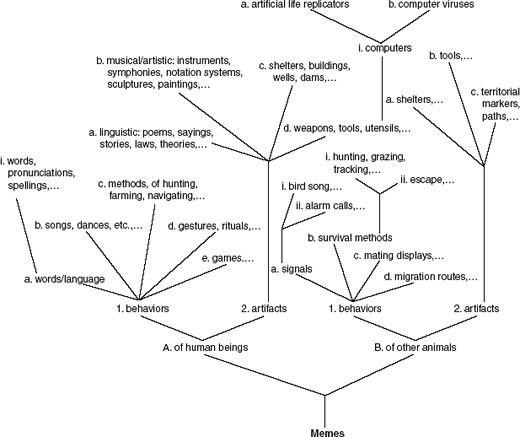

2. The raw materials of religion

3. How Nature deals with the problem of other minds

1. Too many agents: competition for rehearsal space

3. Getting the gods to speak to us

5. Memory-engineering devices in oral cultures

6 The Evolution of Stewardship

2. Folk religion as practical know-how

3. Creeping reflection and the birth of secrecy in religion

4. The domestication of religions

7 The Invention of Team Spirit

1. A path paved with good intentions

2. The ant colony and the corporation

3. The growth market in religion

3. The division of doxastic labor

4. The lowest common denominator?

5. Beliefs designed to be professed

6. Lessons from Lebanon: the strange cases of the Druze and Kim Philby

9 Toward a Buyer's Guide to Religions

3. Why does it matter what you believe?

4. What can your religion do for you?

1. Does religion make us moral?

2. Is religion what gives meaning to your life?

3. What can we say about sacred values?

4. Bless my soul: spirituality and selfishness

2. Some avenues to explore: how can we home in on religious conviction?

3. What shall we tell the children?

Appendixes

B Some More Questions About Science

C The Bellboy and the Lady Named Tuck

D Kim Philby as a Real Case of Indeterminacy of Radical Interpretation

Preface

Let me begin with an obvious fact: I am an American author, and this book is addressed in the first place to American readers. I shared drafts of this book with many readers, and most of my non-American readers found this fact not just obvious but distracting — even objectionable in some cases. Couldn't I make the book less provincial in outlook? Shouldn't I strive, as a philosopher, for the most universal target audience I could muster? No. Not in this case, and my non-American readers should consider what they can learn about the situation in America from what they find in this book. More compelling to me than the reaction of my non-American readers was the fact that so few of my American readers had any inkling of this bias — or, if they did, they didn't object. That is a pattern to ponder. It is commonly observed — both in America and abroad — that America is strikingly different from other First World nations in its attitudes to religion, and this book is, among other things, a sounding device intended to measure the depths of those differences. I decided I had to express the emphases found here if I was to have any hope of reaching my intended audience: the curious and conscientious citizens of my native land — as many as possible, not just the academics. (I saw no point in preaching to the choir.) This is an experiment, a departure from my aims in earlier books, and those who are disoriented or disappointed by the departure now know that I had my reasons, good or bad. Of course I may have missed my target. We shall see.

My focus on America is deliberate; when it comes to contemporary religion, on the other hand, my focus on Christianity first, and Islam and Judaism next, is unintended but unavoidable: I simply do not know enough about other religions to write with any confidence about them. Perhaps I should have devoted several more years to study before writing this book, but since the urgency of the message was borne in on me again and again by current events, I had to settle for the perspectives I had managed to achieve so far. One of the departures from my previous stylistic practices is that for once I am using endnotes, not footnotes. Usually I deplore this practice, since it obliges the scholarly reader to keep an extra bookmark running while flipping back and forth, but in this instance I decided that a reader-friendly flow for a wider audience was more important than the convenience of scholars. This then let me pack rather more material than usual into rather lengthy endnotes, so the inconvenience has some recompense for those who are up for the extra arguments. In the same spirit, I have pulled four chunks of material meant mainly for academic readers out of the main text and deposited them at the end as appendixes. They are referred to at the point in the text where otherwise they would be chapters or chapter sections.

Once again, thanks to Tufts University, I have been able to play Tom Sawyer and the whitewashed fence with a remarkably brave and conscientious group of students, mostly undergraduates, who put their own often deeply held religious convictions on the line, reading an early draft in a seminar in the fall of 2004, correcting many errors, and guiding me into their religious worlds with good humor and tolerance for my gaffes and other offenses. If I do manage to find my target audience, their feedback deserves much of the credit. Thank you, Priscilla Alvarez, Jacquelyn Ardam, Mauricio Artinano, Gajanthan Balakaneshan, Alexandra Barker, Lawrence Bluestone, Sara Brauner, Benjamin Brooks, Sean Chisholm, Erika Clampitt, Sarah Dalglish, Kathleen Daniel, Noah Dock, Hannah Ehrlich, Jed Forman, Aaron Goldberg, Gena Gorlin, Joseph Gulezian, Christopher Healey, Eitan Hersh, Joe Keating, Matthew Kibbee, Tucker Lentz, Chris Lintz, Stephen Martin, Juliana McCanney, Akiko Noro, David Polk, Sameer Puri, Marc Raifman, Lucas Recchione, Edward Rossel, Ariel Rudolph, Mami Sakamaki, Bryan Salvatore, Kyle Thompson-Westra, and Graedon Zorzi.

Thanks also to my happy team in the Center for Cognitive Studies, the teaching assistants, research assistants, research associate, and program assistant. They commented on student essays; advised students who were upset by the project; advised me; helped me devise, refine, copy, and translate questionnaires; entered and analyzed data; retrieved hundreds of books and articles from libraries and Web sites; helped one another; and helped keep me on track: Avery Archer, Felipe de Brigard, Adam Degen Brown, Richard Griffin, and Teresa Salvato. Thanks as well to Chris Westbury, Diana Raffman, John Roberts, John Symons, and Bill Ramsey for their participation at their universities in our questionnaire project, which is still under way, and to John Kihlstrom, Karel de Pauw, and Marcel Kinsbourne for steering me to valuable reading.

Special thanks to Meera Nanda, whose own brave campaign to bring scientific understanding of religion to her native India was one of the inspirations for this book, and also for its title. See her book Breaking the Spell of Dharma (2002) as well as the more recent Prophets Facing Backward (2003).

The readers mentioned in the first paragraph include a few who have chosen to remain anonymous. I thank them, and also Ron Barnette, Akeel Bilgrami, Pascal Boyer, Joanna Bryson, Tom Clark, Bo Dahlbom, Richard Denton, Robert Goldstein, Nick Humphrey, Justin Junge, Matt Konig, Will Lowe, Ian Lustick, Suzanne Massey, Rob McCall, Paul Oppenheim, Seymour Papert, Amber Ross, Don Ross, Paul Seabright, Paul Slovak, Dan Sperber, and Sue Stafford. Once again, Terry Zaroff did an outstanding copyediting stint for me, picking up not just stylistic slips but substantive weaknesses as well. Richard Dawkins and Peter Suber are two who provided particularly valuable suggestions in the course of conversations, as did my agent, John Brockman, and his wife, Katinka Matson, but let me also thank, without naming them, the many other people who have taken an interest in this project over the last two years and provided much-appreciated suggestions, advice, and moral support.

Finally, I must once again thank my wife, Susan, who makes every book of mine a duet, not a solo, in ways I could never calculate.

Daniel Dennett

PART I

OPENING PANDORA'S BOX

CHAPTER ONE

Breaking Which Spell?

1. What's going on?

And he spake many things unto them in parables, saying, Behold, a sower went forth to sow; And when he sowed, some seeds fell by the way side, and the fowls came and devoured them up. — Matthew 13:3-4

If "survival of the fittest" has any validity as a slogan, then the Bible seems a fair candidate for the accolade of the fittest of texts. — Hugh Pyper, "The Selfish Text: The Bible and Memetics"

You watch an ant in a meadow, laboriously climbing up a blade of grass, higher and higher until it falls, then climbs again, and again, like Sisyphus rolling his rock, always striving to reach the top. Why is the ant doing this? What benefit is it seeking for itself in this strenuous and unlikely activity? Wrong question, as it turns out. No biological benefit accrues to the ant. It is not trying to get a better view of the territory or seeking food or showing off to a potential mate, for instance. Its brain has been commandeered by a tiny parasite, a lancet fluke (Dicrocoelium dendriticum), that needs to get itself into the stomach of a sheep or a cow in order to complete its reproductive cycle. This little brain worm is driving the ant into position to benefit its progeny, not the ant's. This is not an isolated phenomenon. Similarly manipulative parasites infect fish and mice, among other species. These hitchhikers cause their hosts to behave in unlikely — even suicidal — ways, all for the benefit of the guest, not the host.1

Does anything like this ever happen with human beings? Yes indeed. We often find human beings setting aside their personal interests, their health, their chances to have children, and devoting their entire lives to furthering the interests of an idea that has lodged in their brains. The Arabic word islam means "submission," and every good Muslim bears witness, prays five times a day, gives alms, fasts during Ramadan, and tries to make the pilgrimage, or hajj, to Mecca, all on behalf of the idea of Allah, and Muhammad, the messenger of Allah. Christians and Jews do likewise, of course, devoting their lives to spreading the Word, making huge sacrifices, suffering bravely, risking their lives for an idea. So do Sikhs and Hindus and Buddhists. And don't forget the many thousands of secular humanists who have given their lives for Democracy, or Justice, or just plain Truth. There are many ideas to die for.

Our ability to devote our lives to something we deem more important than our own personal welfare — or our own biological imperative to have offspring — is one of the things that set us apart from the rest of the animal world. A mother bear will bravely defend a food patch, and ferociously protect her cub, or even her empty den, but probably more people have died in the valiant attempt to protect sacred places and texts than in the attempt to protect food stores or their own children and homes. Like other animals, we have built-in desires to reproduce and to do pretty much whatever it takes to achieve this goal, but we also have creeds, and the ability to transcend our genetic imperatives. This fact does make us different, but it is itself a biological fact, visible to natural science, and something that requires an explanation from natural science. How did just one species, Homo sapiens, come to have these extraordinary perspectives on their own lives?

Hardly anybody would say that the most important thing in life is having more grandchildren than one's rivals do, but this is the default summum bonum of every wild animal. They don't know any better. They can't. They're just animals. There is one interesting exception, it seems: the dog. Can't "man's best friend" exhibit devotion that rivals that of a human friend? Won't a dog even die if need be to protect its master? Yes, and it is no coincidence that this admirable trait is found in a domesticated species. The dogs of today are the offspring of the dogs our ancestors most loved and admired in the past; without even trying to breed for loyalty, they managed to do so, bringing out the best (by their lights, by our lights) in our companion animals.2 Did we unconsciously model this devotion to a master on our own devotion to God? Were we shaping dogs in our own image? Perhaps, but then where did we get our devotion to God?

The comparison with which I began, between a parasitic worm invading an ant's brain and an idea invading a human brain, probably seems both far-fetched and outrageous. Unlike worms, ideas aren't alive, and don't invade brains; they are created by minds. True on both counts, but these are not as telling objections as they first appear. Ideas aren't alive; they can't see where they're going and have no limbs with which to steer a host brain even if they could see. True, but a lancet fluke isn't exactly a rocket scientist either; it's no more intelligent than a carrot, really; it doesn't even have a brain. What it has is just the good fortune of being endowed with features that affect ant brains in this useful way whenever it comes in contact with them. (These features are like the eye spots on butterfly wings that sometimes fool predatory birds into thinking some big animal is looking at them. The birds are scared away and the butterflies are the beneficiaries, but are none the wiser for it.) An inert idea, if it were designed just right, might have a beneficial effect on a brain without having to know it was doing so! And if it did, it might prosper because it had that design.

The comparison of the Word of God to a lancet fluke is unsettling, but the idea of comparing an idea to a living thing is not new. I have a page of music, written on parchment in the mid-sixteenth century, which I found half a century ago in a Paris bookstall. The text (in Latin) recounts the moral of the parable of the Sower (Matthew 13): Semen est verbum Dei; sator autem Christus. The Word of God is a seed, and the sower of the seed is Christ. These seeds take root in individual human beings, it seems, and get those human beings to spread them, far and wide (and in return, the human hosts get eternal life — eum qui audit manebit in aeternum).

How are ideas created by minds? It might be by miraculous inspiration, or it might be by more natural means, as ideas are spread from mind to mind, surviving translation between different languages, hitchhiking on songs and icons and statues and rituals, coming together in unlikely combinations in particular people's heads, where they give rise to yet further new "creations," bearing family resemblances to the ideas that inspired them but adding new features, new powers as they go. And perhaps some of the "wild" ideas that first invaded our minds have yielded offspring that have been domesticated and tamed, as we have attempted to become their masters or at least their stewards, their shepherds. What are the ancestors of the domesticated ideas that spread today? Where did they originate and why? And once our ancestors took on the goal of spreading these ideas, not just harboring them but cherishing them, how did this belief in belief transform the ideas being spread?

The great ideas of religion have been holding us human beings enthralled for thousands of years, longer than recorded history but still just a brief moment in biological time. If we want to understand the nature of religion today, as a natural phenomenon, we have to look not just at what it is today, but at what it used to be. An account of the origins of religion, in the next seven chapters, will provide us with a new perspective from which to look, in the last three chapters, at what religion is today, why it means so much to so many people, and what they might be right and wrong about in their self-understanding as religious people. Then we can see better where religion might be heading in the near future, our future on this planet. I can think of no more important topic to investigate.

2. A working definition of religion

Philosophers stretch the meaning of words until they retain scarcely anything of their original sense; by calling "God" some vague abstraction which they have created for themselves, they pose as deists, as believers, before the world; they may even pride themselves on having attained a higher and purer idea of God, although their God is nothing but an insubstantial shadow and no longer the mighty personality of religious doctrine. — Sigmund Freud, The Future of an Illusion

How do I define religion? It doesn't matter just how I define it, since I plan to examine and discuss the neighboring phenomena that (probably) aren't religions — spirituality, commitment to secular organizations, fanatical devotion to ethnic groups (or sports teams), superstition.... So, wherever I "draw the line," I'll be going over the line in any case. As you will see, what we usually call religions are composed of a variety of quite different phenomena, arising from different circumstances and having different implications, forming a loose family of phenomena, not a "natural kind" like a chemical element or a species.

What is the essence of religion? This question should be considered askance. Even if there is a deep and important affinity between many or even most of the world's religions, there are sure to be variants that share some typical features while lacking one or another "essential" feature. As evolutionary biology advanced during the last century, we gradually came to appreciate the deep reasons for grouping living things the way we do — sponges are animals, and birds are more closely related to dinosaurs than frogs are — and new surprises are still being discovered every year. So we should expect — and tolerate — some difficulty in arriving at a counterexample-proof definition of something as diverse and complex as religion. Sharks and dolphins look very much alike and behave in many similar ways, but they are not the same sort of thing at all. Perhaps, once we understand the whole field better, we will see that Buddhism and Islam, for all their similarities, deserve to be considered two entirely different species of cultural phenomenon. We can start with common sense and tradition and consider them both to be religions, but we shouldn't blind ourselves to the prospect that our initial sorting may have to be adjusted as we learn more. Why is suckling one's young more fundamental than living in the ocean? Why is having a backbone more fundamental than having wings? It may be obvious now, but it wasn't obvious at the dawn of biology.

In the United Kingdom, the law regarding cruelty to animals draws an important moral line at whether the animal is a vertebrate: as far as the law is concerned, you may do what you like to a live worm or fly or shrimp, but not to a live bird or frog or mouse. It's a pretty good place to draw the line, but laws can be amended, and this one was. Cephalopods — octopus, squid, cuttlefish — were recently made honorary vertebrates, in effect, because they, unlike their close mollusc cousins the clams and oysters, have such strikingly sophisticated nervous systems. This seems to me a wise political adjustment, since the similarities that mattered to the law and morality didn't line up perfectly with the deep principles of biology.

We may find that drawing a boundary between religion and its nearest neighbors among cultural phenomena is beset with similar, but more vexing, problems. For instance, since the law (in the United States, at least) singles out religions for special status, declaring something that has been regarded as a religion to be really something else is bound to be of more than academic interest to those involved. Wicca (witchcraft) and other New Age phenomena have been championed as religions by their adherents precisely in order to elevate them to the legal and social status that religions have traditionally enjoyed. And, coming from the other direction, there are those who have claimed that evolutionary biology is really "just another religion," and hence its doctrines have no place in the public-school curriculum. Legal protection, honor, prestige, and a traditional exemption from certain sorts of analysis and criticism — a great deal hinges on how we define religion. How should I handle this delicate issue?

Tentatively, I propose to define religions as social systems whose participants avow belief in a supernatural agent or agents whose approval is to be sought. This is, of course, a circuitous way of articulating the idea that a religion without God or gods is like a vertebrate without a backbone.3 Some of the reasons for this roundabout language are fairly obvious; others will emerge over time — and the definition is subject to revision, a place to start, not something carved in stone to be defended to the death. According to this definition, a devout Elvis Presley fan club is not a religion, because, although the members may, in a fairly obvious sense, worship Elvis, he is not deemed by them to be literally supernatural, but just to have been a particularly superb human being. (And if some fan clubs decide that Elvis is truly immortal and divine, then they are indeed on the way to starting a new religion.) A supernatural agent need not be very anthropomorphic. The Old Testament Jehovah is definitely a sort of divine man (not a woman), who sees with eyes and hears with ears — and talks and acts in real time. (God waited to see what Job would do, and then he spoke to him.) Many contemporary Christians, Jews, and Muslims insist that God, or Allah, being omniscient, has no need for anything like sense organs, and, being eternal, does not act in real time. This is puzzling, since many of them continue to pray to God, to hope that God will answer their prayers tomorrow, to express gratitude to God for creating the universe, and to use such locutions as "what God intends us to do" and "God have mercy," acts that seem to be in flat contradiction to their insistence that their God is not at all anthropomorphic. According to a long-standing tradition, this tension between God as agent and God as eternal and immutable Being is one of those things that are simply beyond human comprehension, and it would be foolish and arrogant to try to understand it.
That is as it may be, and this topic will be carefully treated later in the book, but we cannot proceed with my definition of religion (or any other definition, really) until we (tentatively, pending further illumination) get a little clearer about the spectrum of views that are discernible through this pious fog of modest incomprehension. We need to seek further interpretation before we can decide how to classify the doctrines these people espouse.

For some people, prayer is not literally talking to God but, rather, a "symbolic" activity, a way of talking to oneself about one's deepest concerns, expressed metaphorically. It is rather like beginning a diary entry with "Dear Diary." If what they call God is really not an agent in their eyes, a being that can answer prayers, approve and disapprove, receive sacrifices, and mete out punishment or forgiveness, then, although they may call this Being God, and stand in awe of it (not Him), their creed, whatever it is, is not really a religion according to my definition. It is, perhaps, a wonderful (or terrible) surrogate for religion, or a former religion, an offspring of a genuine religion that bears many family resemblances to religion, but it is another species altogether.4 In order to get clear about what religions are, we will have to allow that some religions may have turned into things that aren't religions any more. This has certainly happened to particular practices and traditions that used to be parts of genuine religions. The rituals of Halloween are no longer religious rituals, at least in America. The people who go to great effort and expense to participate in them are not, thereby, practicing religion, even though their activities can be placed in a clear line of descent from religious practices. Belief in Santa Claus has also lost its status as a religious belief.

For others, prayer really is talking to God, who (not which) really does listen, and forgive. Their creed is a religion, according to my definition, provided that they are part of a larger social system or community, not a congregation of one. In this regard, my definition is profoundly at odds with that of William James, who defined religion as "the feelings, acts, and experiences of individual men in their solitude, so far as they apprehend themselves to stand in relation to whatever they may consider the divine" (1902, p. 31). He would have no difficulty identifying a lone believer as a person with a religion; he himself was apparently such a one. This concentration on individual, private religious experience was a tactical choice for James; he thought that the creeds, rituals, trappings, and political hierarchies of "organized" religion were a distraction from the root phenomenon, and his tactical path bore wonderful fruit, but he could hardly deny that those social and cultural factors hugely affect the content and structure of the individual's experience. Today, there are reasons for trading in James's psychological microscope for a wide-angle biological and social telescope, looking at the factors, over large expanses of both space and time, that shape the experiences and actions of individual religious people.

But just as James could hardly deny the social and cultural factors, I could hardly deny the existence of individuals who very sincerely and devoutly take themselves to be the lone communicants of what we might call private religions. Typically these people have had considerable experience with one or more world religions and have chosen not to be joiners. Not wanting to ignore them, but needing to distinguish them from the much, much more typical religious people who identify themselves with a particular creed or church that has many other members, I shall call them spiritual people, but not religious. They are, if you like, honorary vertebrates.

There are many other variants to be considered in due course — for instance, people who pray, and believe in the efficacy of prayer, but don't believe that this efficacy is channeled through an agent God who literally hears the prayer. I want to postpone consideration of all these issues until we have a clearer sense of where these doctrines sprang from. The core phenomenon of religion, I am proposing, invokes gods who are effective agents in real time, and who play a central role in the way the participants think about what they ought to do. I use the evasive word "invokes" here because, as we shall see in a later chapter, the standard word "belief" tends to distort and camouflage some of the most interesting features of religion. To put it provocatively, religious belief isn't always belief. And why is the approval of the supernatural agent or agents to be sought? That clause is included to distinguish religion from "black magic" of various sorts. There are people — very few, actually, although juicy urban legends about "satanic cults" would have us think otherwise — who take themselves to be able to command demons with whom they form some sort of unholy alliance. These (barely existent) social systems are on the boundary with religion, but I think it is appropriate to leave them out, since our intuitions recoil at the idea that people who engage in this kind of tripe deserve the special status of the devout. What apparently grounds the widespread respect in which religions of all kinds are held is the sense that those who are religious are well intentioned, trying to lead morally good lives, earnest in their desire not to do evil, and to make amends for their transgressions. Somebody who is both so selfish and so gullible as to try to make a pact with evil supernatural agents in order to get his way in the world lives in a comic-book world of superstition and deserves no such respect.5

3. To break or not to break

Science is like a blabbermouth who ruins a movie by telling you how it ends. — Ned Flanders (fictional character on The Simpsons)

You're at a concert, awestruck and breathless, listening to your favorite musicians on their farewell tour, and the sweet music is lifting you, carrying you away to another place . . . and then somebody's cell phone starts ringing! Breaking the spell. Hateful, vile, inexcusable. This inconsiderate jerk has ruined the concert for you, stolen a precious moment that can never be recovered. How evil it is to break somebody's spell! I don't want to be that person with the cell phone, and I am well aware that I will seem to many people to be courting just that fate by embarking on this book.

The problem is that there are good spells and then there are bad spells. If only some timely phone call could have interrupted the proceedings at Jonestown in Guyana in 1978, when the lunatic Jim Jones was ordering his hundreds of spellbound followers to commit suicide! If only we could have broken the spell that enticed the Japanese cult Aum Shinrikyo to release sarin gas in a Tokyo subway, killing a dozen people and injuring thousands more! If only we could figure out some way today to break the spell that lures thousands of poor young Muslim boys into fanatical madrassahs where they are prepared for a life of murderous martyrdom instead of being taught about the modern world, about democracy and history and science! If only we could break the spell that convinces some of our fellow citizens that they are commanded by God to bomb abortion clinics!

Religious cults and political fanatics are not the only casters of evil spells today. Think of the people who are addicted to drugs, or gambling, or alcohol, or child pornography. They need all the help they can get, and I doubt if anybody is inclined to throw a protective mantle around these entranced ones and admonish, "Shhh! Don't break the spell!" And it may be that the best way to break these bad spells is to introduce the spellbound to a good spell, a god spell, a gospel. It may be, and it may not. We should try to find out. Perhaps, while we're at it, we should inquire whether the world would be a better place if we could snap our fingers and cure the workaholics, too — but now I'm entering controversial waters. Many workaholics would claim that theirs is a benign addiction, useful to society and to their loved ones, and, besides, they would insist, it is their right, in a free society, to follow their hearts wherever they lead, so long as no harm comes to anyone else. The principle is unassailable: we others have no right to intrude on their private practices so long as we can be quite sure that they are not injuring others. But it is getting harder and harder to be sure about when this is the case.

People make themselves dependent on many things. Some think they cannot live without daily newspapers and a free press, whereas others think they cannot live without cigarettes. Some think a life without music would not be worth living, and others think a life without religion would not be worth living. Are these addictions? Or are these genuine needs that we should strive to preserve, at almost any cost?

Eventually, we must arrive at questions about ultimate values, and no factual investigation could answer them. Instead, we can do no better than to sit down and reason together, a political process of mutual persuasion and education that we can try to conduct in good faith. But in order to do that we have to know what we are choosing between, and we need to have a clear account of the reasons that can be offered for and against the different visions of the participants. Those who refuse to participate (because they already know the answers in their hearts) are, from the point of view of the rest of us, part of the problem. Instead of being participants in our democratic effort to find agreement among our fellow human beings, they place themselves in the inventory of obstacles to be dealt with, one way or another. As with El Niño and global warming, there is no point in trying to argue with them, but every reason to study them assiduously, whether they like it or not. They may change their minds and rejoin our political congregation, and assist us in the exploration of the grounds for their attitudes and practices, but whether or not they do, it behooves the rest of us to learn everything we can about them, for they put at risk what we hold dear.

It is high time that we subject religion as a global phenomenon to the most intensive multidisciplinary research we can muster, calling on the best minds on the planet. Why? Because religion is too important for us to remain ignorant about. It affects not just our social, political, and economic conflicts, but the very meanings we find in our lives. For many people, probably a majority of the people on Earth, nothing matters more than religion. For this very reason, it is imperative that we learn as much as we can about it. That, in a nutshell, is the argument of this book.

Wouldn't such an exhaustive and invasive examination damage the phenomenon itself? Mightn't it break the spell? That is a good question, and I don't know the answer. Nobody knows the answer. That is why I raise the question, to explore it carefully now, so that we (1) don't rush headlong into inquiries we would all be much better off not undertaking, and yet (2) don't hide facts from ourselves that could guide us to better lives for all. The people on this planet confront a terrible array of problems — poverty, hunger, disease, oppression, the violence of war and crime, and many more — and in the twenty-first century we have unparalleled powers for doing something about all these problems. But what shall we do?

Good intentions are not enough. If we learned anything in the twentieth century, we learned this, for we made some colossal mistakes with the best of intentions. In the early decades of the century, communism seemed to many millions of thoughtful, well-intentioned people to be a beautiful and even obvious solution to the terrible unfairness that all can see, but they were wrong. An obscenely costly mistake. Prohibition also seemed like a good idea at the time, not just to power-hungry prudes intent on imposing their taste on their fellow citizens, but to many decent people who could see the terrible toll of alcoholism and figured that nothing short of a total ban would suffice. They were proven wrong, and we still haven't recovered from all the bad effects that well-intentioned policy set in motion. There was a time, not so long ago, when the idea of keeping blacks and whites in separate communities, with separate facilities, seemed to many sincere people to be a reasonable solution to pressing problems of interracial strife. It took the civil-rights movement in the United States, and the painful and humiliating experience of Apartheid and its eventual dismantling in South Africa, to show how wrong those well-intentioned people were to have ever believed this. Shame on them, you may say. They should have known better. That is my point. We can come to know better if we try our best to find out, and we have no excuse for not trying. Or do we? Are some topics off limits, no matter what the consequences?

Today, billions of people pray for peace, and I wouldn't be surprised if most of them believe with all their hearts that the best path to follow to peace throughout the world is a path that runs through their particular religious institution, whether it is Christianity, Judaism, Islam, Hinduism, Buddhism, or any of hundreds of other systems of religion. Indeed, many people think that the best hope for humankind is that we can bring together all of the religions of the world in a mutually respectful conversation and ultimate agreement on how to treat one another. They may be right, but they don't know. The fervor of their belief is no substitute for good hard evidence, and the evidence in favor of this beautiful hope is hardly overwhelming. In fact, it is not persuasive at all, since just as many people, apparently, sincerely believe that world peace is less important, in both the short run and the long, than the global triumph of their particular religion over its competition. Some see religion as the best hope for peace, a lifeboat we dare not rock lest we overturn it and all of us perish, and others see religious self-identification as the main source of conflict and violence in the world, and believe just as fervently that religious conviction is a terrible substitute for calm, informed reasoning. Good intentions pave both roads.

Who is right? I don't know. Neither do the billions of people with their passionate religious convictions. Neither do those atheists who are sure the world would be a much better place if all religion went extinct. There is an asymmetry: atheists in general welcome the most intensive and objective examination of their views, practices, and reasons. (In fact, their incessant demand for self-examination can become quite tedious.) The religious, in contrast, often bristle at the impertinence, the lack of respect, the sacrilege, implied by anybody who wants to investigate their views. I respectfully demur: there is indeed an ancient tradition to which they are appealing here, but it is mistaken and should not be permitted to continue. This spell must be broken, and broken now. Those who are religious and believe religion to be the best hope of humankind cannot reasonably expect those of us who are skeptical to refrain from expressing our doubts if they themselves are unwilling to put their convictions under the microscope. If they are right — especially if they are obviously right, on further reflection — we skeptics will not only concede this but enthusiastically join the cause. We want what they (mostly) say they want: a world at peace, with as little suffering as we can manage, with freedom and justice and well-being and meaning for all. If the case for their path cannot be made, this is something that they themselves should want to know. It is as simple as that. They claim the moral high ground; maybe they deserve it and maybe they don't. Let's find out.

4. Peering into the abyss

Philosophy is questions that may never be answered. Religion is answers that may never be questioned. — Anonymous

The spell that I say must be broken is the taboo against a forthright, scientific, no-holds-barred investigation of religion as one natural phenomenon among many. But certainly one of the most pressing and plausible reasons for resisting this claim is the fear that if that spell is broken — if religion is put under the bright lights and the microscope — there is a serious risk of breaking a different and much more important spell: the life-enriching enchantment of religion itself. If interference caused by scientific investigation somehow disabled people, rendering them incapable of states of mind that are the springboards for religious experience or religious conviction, this could be a terrible calamity. You can only lose your virginity once, and some are afraid that imposing too much knowledge on some topics could rob people of their innocence, crippling their hearts in the guise of expanding their minds. To see the problem, one has only to reflect on the recent global onslaught of secular Western technology and culture, sweeping hundreds of languages and cultures to extinction in a few generations. Couldn't the same thing happen to your religion? Shouldn't we leave well enough alone, just in case? What arrogant nonsense, others will scoff. The Word of God is invulnerable to the puny forays of meddling scientists. The presumption that curious infidels need tiptoe around to avoid disturbing the faithful is laughable, they say. But in that case, there would be no harm in looking, would there? And we might learn something important.

The first spell — the taboo — and the second spell — religion itself — are bound together in a curious embrace. Part of the strength of the second may be — may be — the protection it receives from the first. But who knows? If we are enjoined by the first spell not to investigate this possible causal link, then the second spell has a handy shield, whether it needs it or not. The relationship between these two spells is vividly illustrated in Hans Christian Andersen's charming fable "The Emperor's New Clothes." Sometimes falsehoods and myths that are "common wisdom" can survive indefinitely simply because the prospect of exposing them is itself rendered daunting or awkward by a taboo. An indefensible mutual presumption can be kept aloft for years or even centuries because each person assumes that somebody else has some very good reasons for maintaining it, and nobody dares to challenge it.

Up to now, there has been a largely unexamined mutual agreement that scientists and other researchers will leave religion alone, or restrict themselves to a few sidelong glances, since people get so upset at the mere thought of a more intensive inquiry. I propose to disrupt this presumption, and examine it. If we shouldn't study all the ins and outs of religion, I want to know why, and I want to see good, factually supported reasons, not just an appeal to the tradition I am rejecting. If the traditional cloak of privacy or "sanctuary" is to be left in place, we should know why we're doing this, since a compelling case can be made that we're paying a terrible price for our ignorance. This sets the order of business: First, we must look at the issue of whether the first spell — the taboo — should be broken. Of course, by writing and publishing this book I am jumping the gun, leaping in and trying to break the first spell, but one has to start somewhere. Before continuing further, then, and possibly making matters worse, I am going to pause to defend my decision to try to break that spell. Then, having mounted my defense for starting the project, I am going to start the project! Not by answering the big questions that motivate the whole enterprise but by asking them, as carefully as I can, and pointing out what we already know about how to answer them, and showing why we need to answer them.

I am a philosopher, not a biologist or an anthropologist or a sociologist or historian or theologian. We philosophers are better at asking questions than at answering them, and this may strike some people as a comical admission of futility — "He says his specialty is just asking questions, not answering them. What a puny job! And they pay him for this?" But anybody who has ever tackled a truly tough problem knows that one of the most difficult tasks is finding the right questions to ask and the right order to ask them in. You have to figure out not only what you don't know, but what you need to know and don't need to know, and what you need to know in order to figure out what you need to know, and so forth. The form our questions take opens up some avenues and closes off others, and we don't want to waste time and energy barking up the wrong trees. Philosophers can sometimes help in this endeavor, but of course they have often gotten in the way, too. Then some other philosopher has to come in and try to clean up the mess. I have always liked the way John Locke put it, in the "Epistle to the Reader" at the beginning of his Essay Concerning Human Understanding (1690):

. . . it is ambition enough to be employed as an under-labourer in clearing the ground a little, and removing some of the rubbish that lies in the way to knowledge; — which certainly had been very much more advanced in the world, if the endeavours of ingenious and industrious men had not been much cumbered with the learned but frivolous use of uncouth, affected, or unintelligible terms, introduced into the sciences, and there made an art of, to that degree that Philosophy, which is nothing but the true knowledge of things, was thought unfit or incapable to be brought into well-bred company and polite conversation.

Another of my philosophical heroes, William James, recognized as well as any philosopher ever has the importance of enriching your philosophical diet of abstractions and logical arguments with large helpings of hard-won fact, and just about a hundred years ago, he published his classic investigation, The Varieties of Religious Experience. It will be cited often in this book, for it is a treasure trove of insights and arguments, too often overlooked in recent times, and I will begin by putting an old tale he recounts to a new use:

A story which revivalist preachers often tell is that of a man who found himself at night slipping down the side of a precipice. At last he caught a branch which stopped his fall, and remained clinging to it in misery for hours. But finally his fingers had to loose their hold, and with a despairing farewell to life, he let himself drop. He fell just six inches. If he had given up the struggle earlier, his agony would have been spared. [James, 1902, p. 111]

Like the revivalist preacher, I say unto you, O religious folks who fear to break the taboo: Let go! Let go! You'll hardly notice the drop! The sooner we set about studying religion scientifically, the sooner your deepest fears will be allayed. But that is just a plea, not an argument, so I must persist with my case. I ask just that you try to keep an open mind and refrain from prejudging what I say because I am a godless philosopher, while I similarly do my best to understand you. (I am a bright. My essay "The Bright Stuff," in the New York Times, July 12, 2003, drew attention to the efforts of some agnostics, atheists, and other adherents of naturalism to coin a new term for us nonbelievers, and the large positive response to that essay helped persuade me to write this book. There was also a negative response, largely objecting to the term that had been chosen [not by me]: bright, which seemed to imply that others were dim or stupid. But the term, modeled on the highly successful hijacking of the ordinary word "gay" by homosexuals, does not have to have that implication. Those who are not gays are not necessarily glum; they're straight. Those who are not brights are not necessarily dim. They might like to choose a name for themselves. Since, unlike us brights, they believe in the supernatural, perhaps they would like to call themselves supers. It's a nice word with positive connotations, like gay and bright and straight. Some people would not willingly associate with somebody who was openly gay, and others would not willingly read a book by somebody who was openly bright. But there is a first time for everything. Try it. You can always back out later if it becomes too offensive.)

As you can already see, this is going to be something of a rollercoaster ride for both of us. I have interviewed many deeply religious people in the last few years, and most of these volunteers had never conversed with anybody like me about such topics (and I had certainly never before attempted to broach such delicate topics with people so unlike myself), so there were more than a few awkward surprises and embarrassing miscommunications. I learned a lot, but in spite of my best efforts I will no doubt outrage some readers, and display my ignorance of matters they consider of the greatest importance. This will give them a handy reason to discard my book without considering just which points in it they disagree with and why. I ask that they resist hiding behind this excuse and soldier on. They will learn something, and then they may be able to teach us all something.

Some people think it is deeply immoral even to consider reading such a book as this! For them, wondering whether they should read it would be as shameful as wondering whether to watch a pornographic videotape. The psychologist Philip Tetlock (1999, 2003, 2004) identifies values as sacred when they are so important to those who hold them that the very act of considering them is offensive. The comedian Jack Benny was famously stingy — or so he presented himself on radio and television — and one of his best bits was the skit in which a mugger puts a gun in his back and barks, "Your money or your life!" Benny just stands there silently. "Your money or your life!" repeats the mugger, with mounting impatience. "I'm thinking, I'm thinking," Benny replies. This is funny because most of us — religious or not — think that nobody should even think about such a trade-off. Nobody should have to think about such a trade-off. It should be unthinkable, a "no-brainer." Life is sacred, and no amount of money would be a fair exchange for a life, and if you don't already know that, what's wrong with you? "To transgress this boundary, to attach a monetary value to one's friendships, children, or loyalty to one's country, is to disqualify oneself from the accompanying social roles" (Tetlock et al., 2004, p. 5). That is what makes life a sacred value.

Tetlock and his colleagues have conducted ingenious (and sometimes troubling) experiments in which subjects are obliged to consider "taboo trade-offs," such as whether or not to purchase live human body parts for some worthy end, or whether or not to pay somebody to have a baby that you then raise, or pay somebody to perform your military service. As their model predicts, many subjects exhibit a strong "mere contemplation effect": they feel guilty and sometimes get angry about being lured into even thinking about such dire choices, even when they make all the right choices. When given the opportunity by the experimenters to engage in "moral cleansing" (by volunteering for some relevant community service, for instance), subjects who have had to think about taboo trade-offs are significantly more likely than control subjects to volunteer — for real — for such good deeds. (Control subjects had been asked to think about purely secular trade-offs, such as whether to hire a housecleaner or buy food instead of something else.) So this book may do some good by just increasing the level of charity in those who feel guilty reading it! If you feel yourself contaminated by reading this book, you will perhaps feel resentful, but also more eager than you otherwise would be to work off that resentment by engaging in some moral cleansing. I hope so, and you needn't thank me for inspiring you.

In spite of the religious connotations of the term, even atheists and agnostics can have sacred values, values that are simply not up for re-evaluation at all. I have sacred values — in the sense that I feel vaguely guilty even thinking about whether they are defensible and would never consider abandoning them (I like to think!) in the course of solving a moral dilemma. My sacred values are obvious and quite ecumenical: democracy, justice, life, love, and truth (in alphabetical order). But since I'm a philosopher, I've learned how to set aside the vertigo and embarrassment and ask myself what in the end supports even them, what should give when they conflict, as they often tragically do, and whether there are better alternatives. It is this traditional philosophers' open-mindedness to every idea that some people find immoral in itself. They think that they should be closed-minded when it comes to certain topics. They know that they share the planet with others who disagree with them, but they don't want to enter into dialogue with those others. They want to discredit, suppress, or even kill those others. While I recognize that many religious people could never bring themselves to read a book like this — that is part of the problem the book is meant to illuminate — I intend to reach as wide an audience of believers as possible. Other authors have recently written excellent books and articles on the scientific analysis of religion that are directed primarily to their fellow academics. My goal here is to play the role of ambassador, introducing (and distinguishing, criticizing, and defending) the main ideas of that literature. 
This puts my sacred values to work: I want the resolution to the world's problems to be as democratic and just as possible, and both democracy and justice depend on getting on the table for all to see as much of the truth as possible, bearing in mind that sometimes the truth hurts, and hence should sometimes be left concealed, out of love for those who would suffer were it revealed. But I'm prepared to consider alternative values and reconsider the priorities I find among my own.

5. Religion as a natural phenomenon

As every enquiry which regards religion is of the utmost importance, there are two questions in particular which challenge our attention, to wit, that concerning its foundation in reason, and that concerning its origin in human nature. — David Hume, The Natural History of Religion

What do I mean when I speak of religion as a natural phenomenon?

I might mean that it's like natural food — not just tasty but healthy, unadulterated, "organic." (That, at any rate, is the myth.) So do I mean: "Religion is healthy; it's good for you!"? This might be true, but it is not what I mean.

I might mean that religion is not an artifact, not a product of human intellectual activity. Sneezing and belching are natural, reciting sonnets is not; going naked — au naturel — is natural; wearing clothes is not. But it is obviously false that religion is natural in this sense. Religions are transmitted culturally, through language and symbolism, not through the genes. You may get your father's nose and your mother's musical ability through your genes, but if you get your religion from your parents, you get it the way you get your language, through upbringing. So of course that is not what I mean by natural.

With a slightly different emphasis, I might mean that religion is doing what comes naturally, not an acquired taste, or an artificially groomed or educated taste. In this sense, speaking is natural but writing is not; drinking milk is natural but drinking a dry martini is not; listening to tonal music is natural but listening to atonal music is not; gazing at sunsets is natural but gazing at late Picasso paintings is not. There is some truth to this: religion is not an unnatural act, and this will be a topic explored in this book. But it is not what I mean.

I might mean that religion is natural as opposed to supernatural, that it is a human phenomenon composed of events, organisms, objects, structures, patterns, and the like that all obey the laws of physics or biology, and hence do not involve miracles. And that is what I mean. Notice that it could be true that God exists, that God is indeed the intelligent, conscious, loving creator of us all, and yet still religion itself, as a complex set of phenomena, is a perfectly natural phenomenon. Nobody would think it was presupposing atheism to write a book subtitled Sports as a Natural Phenomenon or Cancer as a Natural Phenomenon. Both sports and cancer are widely recognized as natural phenomena, not supernatural, in spite of the well-known exaggerations of various promoters. (I'm thinking, for instance, of two famous touchdown passes known respectively as the Hail Mary and the Immaculate Reception, to say nothing of the weekly trumpetings by researchers and clinics around the world of one "miraculous" cancer cure or another.)

Sports and cancer are the subject of intense scientific scrutiny by researchers working in many disciplines and holding many different religious views. They all assume, tentatively and for the sake of science, that the phenomena they are studying are natural phenomena. This doesn't prejudge the verdict that they are. Perhaps there are sports miracles that actually defy the laws of nature; perhaps some cancer cures are miracles. If so, the only hope of ever demonstrating this to a doubting world would be by adopting the scientific method, with its assumption of no miracles, and showing that science was utterly unable to account for the phenomena. Miracle-hunters must be scrupulous scientists or else they are wasting their time — a point long recognized by the Roman Catholic Church, which at least goes through the motions of subjecting the claims of miracles made on behalf of candidates for sainthood to objective scientific investigation. So no deeply religious person should object to the scientific study of religion with the presumption that it is an entirely natural phenomenon. If it isn't entirely natural, if there really are miracles involved, the best way — indeed, the only way — to show that to doubters would be to demonstrate it scientifically. Refusing to play by these rules only creates the suspicion that one doesn't really believe that religion is supernatural after all.

In assuming that religion is a natural phenomenon, I am not prejudging its value to human life, one way or the other. Religion, like love and music, is natural. But so are smoking, war, and death. In this sense of natural, everything artificial is natural! The Aswan Dam is no less natural than a beaver's dam, and the beauty of a skyscraper is no less natural than the beauty of a sunset. The natural sciences take everything in Nature as their topic, and that includes both jungles and cities, both birds and airplanes, the good, the bad, the ugly, the insignificant, and the all-important as well.

Over two hundred years ago, David Hume wrote two books on religion. One was about religion as a natural phenomenon, and its opening sentence is the epigraph of this section. The other was about the "foundation in reason" of religion, his famous Dialogues Concerning Natural Religion (1779). Hume wanted to consider whether there was any good reason — any scientific reason, we might say — for believing in God. Natural religion, for Hume, would be a creed that was as well supported by evidence and argument as Newton's theory of gravitation, or plane geometry. He contrasted it with revealed religion, which depended on the revelations of mystical experience or other extra-scientific paths to conviction. I gave Hume's Dialogues a place of honor in my 1995 book, Darwin's Dangerous Idea — Hume is yet another of my heroes — so you might think that I intend to pursue this issue still further in this book, but that is not in fact my intention. This time I am pursuing Hume's other path. Philosophers have spent two millennia and more concocting and criticizing arguments for the existence of God, such as the Argument from Design and the Ontological Argument, and arguments against the existence of God, such as the Argument from Evil. Many of us brights have devoted considerable time and energy at some point in our lives to looking at the arguments for and against the existence of God, and many brights continue to pursue these issues, hacking away vigorously at the arguments of the believers as if they were trying to refute a rival scientific theory. But not I. I decided some time ago that diminishing returns had set in on the arguments about God's existence, and I doubt that any breakthroughs are in the offing, from either side. Besides, many deeply religious people insist that all those arguments — on both sides — simply miss the whole point of religion, and their demonstrated lack of interest in the arguments persuades me of their sincerity. Fine. 
So what, then, is the point of religion?

What is this phenomenon or set of phenomena that means so much to so many people, and why — and how — does it command allegiance and shape so many lives so strongly? That is the main question I will address here, and once we have sorted out and clarified (not settled) some of the conflicting answers to this question, it will give us a novel perspective from which to look, briefly, at the traditional philosophical issue that some people insist is the only issue: whether or not there are good reasons for believing in God. Those who insist that they know that God exists and can prove it will have their day in court.

Chapter 1 Religions are among the most powerful natural phenomena on the planet, and we need to understand them better if we are to make informed and just political decisions. Although there are risks and discomforts involved, we should brace ourselves and set aside our traditional reluctance to investigate religious phenomena scientifically, so that we can come to understand how and why religions inspire such devotion, and figure out how we should deal with them all in the twenty-first century.

Chapter 2 There are obstacles confronting the scientific study of religion, and there are misgivings that need to be addressed. A preliminary exploration shows that it is both possible and advisable for us to turn our strongest investigative lights on religion.

CHAPTER TWO

Some Questions About Science

1. Can science study religion?

To be sure, man is, zoologically speaking, an animal. Yet, he is a unique animal, differing from all others in so many fundamental ways that a separate science for man is well-justified. — Ernst Mayr, The Growth of Biological Thought

There has been some confusion about whether the earthly manifestations of religion should count as a part of Nature. Is religion out-of-bounds to science? It all depends on what you mean. If you mean the religious experiences, beliefs, practices, texts, artifacts, institutions, conflicts, and history of H. sapiens, then this is a voluminous catalogue of unquestionably natural phenomena. Considered as psychological states, drug-induced hallucination and religious ecstasy are both amenable to study by neuroscientists and psychologists. Considered as the exercise of cognitive competence, memorizing the periodic table of elements is the same sort of phenomenon as memorizing the Lord's Prayer. Considered as examples of engineering, suspension bridges and cathedrals both obey the law of gravity and are subject to the same sorts of forces and stresses. Considered as salable manufactured goods, both mystery novels and Bibles fall under the regularities of economics. The logistics of holy wars do not differ from the logistics of entirely secular conflicts. "Praise the Lord and pass the ammunition!" as the World War II song said. A crusade or a jihad can be investigated by researchers in many disciplines, from anthropology and military history to nutrition and metallurgy.

In his book Rocks of Ages (1999), the late Stephen Jay Gould defended the political hypothesis that science and religion are two "non-overlapping magisteria" — two domains of concern and inquiry that can coexist peacefully as long as neither poaches on the other's special province. The magisterium of science is factual truth on all matters, and the magisterium of religion, he claimed, is the realm of morality and the meaning of life. Although Gould's desire for peace between these often warring perspectives was laudable, his proposal found little favor on either side, since in the minds of the religious it proposed abandoning all religious claims to factual truth and understanding of the natural world (including the claims that God created the universe, or performs miracles, or listens to prayers), whereas in the minds of the secularists it granted too much authority to religion in matters of ethics and meaning. Gould exposed some clear instances of immodest folly on both sides, but the claim that all conflict between the two perspectives is due to overreaching by one side or the other is implausible, and few readers were persuaded. But whether or not the case can be made for Gould's proposal, my proposal is different. There may be some domain that is religion's alone to command, some realm of human activity that science can't properly address and religion can, but that does not mean that science cannot or should not study this very fact. Gould's own book was presumably a product of just such a scientific investigation, albeit a rather informal one. He looked at religion with the eyes of a scientist and thought he could see a boundary that revealed two domains of human activity. Was he right? That is presumably a scientific, factual question, not a religious question. I am not suggesting that science should try to do what religion does, but that it should study, scientifically, what religion does.

One of the surprising discoveries of modern psychology is how easy it is to be ignorant of your own ignorance. You are normally oblivious of your own blind spot, and people are typically amazed to discover that we don't see colors in our peripheral vision. It seems as if we do, but we don't, as you can prove to yourself by wiggling colored cards at the edge of your vision — you'll see motion just fine but not be able to identify the color of the moving thing. It takes special provoking like that to get the absence of information to reveal itself to us. And the absence of information about religion is what I want to draw to everyone's attention. We have neglected to gather a wealth of information about something of great import to us.

This may come as a surprise. Haven't we been looking carefully at religion for a long time? Yes, of course. There have been centuries of insightful and respectful scholarship about the history and variety of religious phenomena. This work, like the bounty gathered by dedicated bird-watchers and other nature lovers before Darwin's time, is proving to be a hugely valuable resource to those pioneers who are now beginning, for the first time really, to study the natural phenomena of religion through the eyes of contemporary science. Darwin's breakthrough in biology was enabled by his deep knowledge of the wealth of empirical details scrupulously garnered by hundreds of pre-Darwinian, non-Darwinian natural historians. Their theoretical innocence was itself an important check on his enthusiasm; they had not gathered their facts with an eye to proving Darwinian theory correct, and we can be equally grateful that almost all the "natural history of religion" that has been accumulated to date is, if not theoretically innocent, at least oblivious to the sorts of theories that now may be supported or undercut by it.

The research to date has hardly been neutral, however. We don't just walk up to religious phenomena and study them point-blank, as if they were fossils or soybeans in a field. Researchers tend to be either respectful, deferential, diplomatic, tentative — or hostile, invasive, and contemptuous. It is just about impossible to be neutral in your approach to religion, because many people view neutrality in itself as hostile. If you're not for us, you're against us. And so, since religion so clearly matters so much to so many people, researchers have almost never even attempted to be neutral; they have tended to err on the side of deference, putting on the kid gloves. It is either that or open hostility. For this reason, there has been an unfortunate pattern in the work that has been done. People who want to study religion usually have an ax to grind. They either want to defend their favorite religion from its critics or want to demonstrate the irrationality and futility of religion, and this tends to infect their methods with bias. Such distortion is not inevitable. Scientists in every field have pet theories they hope to confirm, or target hypotheses they yearn to demolish, but, knowing this, they take a variety of tried-and-true steps to prevent their bias from polluting their evidence-gathering: double-blind experiments, peer review, statistical tests, and many other standard constraints of good scientific method. But in the study of religion, the stakes have often been seen to be higher. If you think that the disconfirmation of a hypothesis about one religious phenomenon or another would not be just an undesirable crack in the foundation of some theory but a moral calamity, you tend not to run all the controls. Or so, at least, it has often seemed to observers.

That impression, true or false, has created a positive feedback loop: scientists don't want to deal with second-rate colleagues, so they tend to shun topics where they see what they take to be mediocre work being done. This self-selection is a frustrating pattern that begins when students think about "choosing a major" in college. The best students typically shop around, and if they are unimpressed by the work they are introduced to in the first course in a field, they cross that field off their list for good. When I was an undergraduate, physics was still the glamour field, and then the race to the moon drew more than its share of talent. (A fossil trace is the phrase "Hey, it's not rocket science.") This was followed by computer science for a while, and all along — for half a century and more — biology, especially molecular biology, has attracted many of the smartest. Today, cognitive science and the various strands of evolutionary biology — bio-informatics, genetics, developmental biology — are on the rise. But through all this period, sociology and anthropology, social psychology, and my own home field, philosophy, have struggled along, attracting those whose interests match the field well, including some brilliant people, but having to combat somewhat unenviable reputations. As my old friend and former colleague, Nelson Pike, a respected philosopher of religion, once ruefully put it:

If you are in a company of people of mixed occupations, and somebody asks what you do, and you say you are a college professor, a glazed look comes into his eye. If you are in a company of professors from various departments, and somebody asks what is your field, and you say philosophy, a glazed look comes into his eye. If you are at a conference of philosophers, and somebody asks you what you are working on, and you say philosophy of religion . . . [Quoted in Bambrough, 1980]

This is not just a problem for philosophers of religion. It is equally a problem for sociologists of religion, psychologists of religion, and other social scientists — economists, political scientists — and for those few brave neuroscientists and other biologists who have decided to look at religious phenomena with the tools of their trade. One of the factors is that people think they already know everything they need to know about religion, and this received wisdom is pretty bland, not provocative enough to inspire either refutation or extension. In fact, if you set out to design an impermeable barrier between scientists and an underexplored phenomenon, you could hardly do better than to fabricate the dreary aura of low prestige, backbiting, and dubious results that currently envelops the topic of religion. And since we know from the outset that many people think such research violates a taboo, or at least meddles impertinently in matters best left private, it is not so surprising that few good researchers, in any discipline, want to touch the topic. I myself certainly felt that way until recently.

These obstacles can be overcome. In the twentieth century, a lot was learned about how to study human phenomena, social phenomena. Wave after wave of research and criticism has sharpened our appreciation of the particular pitfalls, such as biases in data-gathering, investigator-interference effects, and the interpretation of data. Statistical and analytical techniques have become much more sophisticated, and we have begun setting aside the old oversimplified models of human perception, emotion, motivation, and control of action and replacing them with more physiologically and psychologically realistic models. The yawning chasm that was seen to separate the sciences of the mind (Geisteswissenschaften) from the natural sciences (Naturwissenschaften) has not yet been bridged securely, but many lines have been flung across the divide. Mutual suspicion and professional jealousy as well as genuine theoretical controversy continue to shake almost all efforts to carry insights back and forth on these connecting routes, but every day the traffic grows. The question is not whether good science of religion as a natural phenomenon is possible: it is. The question is whether we should do it.

2. Should science study religion?

Look before you leap. — Aesop, "The Fox and the Goat"

Research is expensive and sometimes has harmful side effects. One of the lessons of the twentieth century is that scientists are not above confabulating justifications for the work they want to do, driven by insatiable curiosity. Are there in fact good reasons, aside from sheer curiosity, to try to develop the natural science of religion? Do we need this for anything? Would it help us choose policies, respond to problems, improve our world? What do we know about the future of religion? Consider five wildly different hypotheses:

1. The Enlightenment is long gone; the creeping "secularization" of modern societies that has been anticipated for two centuries is evaporating before our eyes. The tide is turning and religion is becoming more important than ever. In this scenario, religion soon resumes something like the dominant social and moral role it had before the rise of modern science in the seventeenth century. As people recover from their infatuation with technology and material comforts, spiritual identity becomes a person's most valued attribute, and populations come to be ever more sharply divided among Christianity, Islam, Judaism, Hinduism, and a few other major multinational religious organizations. Eventually — it might take another millennium, or it might be hastened by catastrophe — one major faith sweeps the planet.

2. Religion is in its death throes; today's outbursts of fervor and fanaticism are but a brief and awkward transition to a truly modern society in which religion plays at most a ceremonial role. In this scenario, although there may be some local and temporary revivals and even some violent catastrophes, the major religions of the world soon go just as extinct as the hundreds of minor religions that are vanishing faster than anthropologists can record them. Within the lifetimes of our grandchildren, Vatican City becomes the European Museum of Roman Catholicism, and Mecca is turned into Disney's Magic Kingdom of Allah.

3. Religions transform themselves into institutions unlike anything seen before on the planet: basically creedless associations selling self-help and enabling moral teamwork, using ceremony and tradition to cement relationships and build "long-term fan loyalty." In this scenario, being a member of a religion becomes more and more like being a Boston Red Sox fan, or a Dallas Cowboys fan. Different colors, different songs and cheers, different symbols, and vigorous competition — would you want your daughter to marry a Yankees fan? — but aside from a rabid few, everybody appreciates the importance of peaceful coexistence in a Global League of Religions. Religious art and music flourish, and friendly rivalry leads to a degree of specialization, with one religion priding itself on its environmental stewardship, providing clean water for the world's billions, while another becomes duly famous for its concerted defense of social justice and economic equality.

4. Religion diminishes in prestige and visibility, rather like smoking; it is tolerated, since there are those who say they can't live without it, but it is discouraged, and teaching religion to impressionable young children is frowned upon in most societies and actually outlawed in others. In this scenario, politicians who still practice religion can be elected if they prove themselves worthy in other regards, but few would advertise their religious affiliation — or affliction, as the politically incorrect insist on calling it. It is considered as rude to draw attention to the religion of somebody as it is to comment in public about his sexuality or whether she has been divorced.

5. Judgment Day arrives. The blessed ascend bodily into heaven, and the rest are left behind to suffer the agonies of the damned, as the Antichrist is vanquished. As the Bible prophecies foretold, the rebirth of the nation of Israel in 1948 and the ongoing conflict over Palestine are clear signs of the End Times, when the Second Coming of Christ sweeps all the other hypotheses into oblivion.

Other possibilities are describable, of course, but these five hypotheses highlight the extremes that are taken seriously. What is remarkable about the set is that just about anybody would find at least one of them preposterous, or troubling, or even deeply offensive, but every one of them is, by somebody, not just anticipated but yearned for. People act on what they yearn for. We are at cross-purposes about religion, to say the least, so we can anticipate problems, ranging from wasted effort and counterproductive campaigns if we are lucky to all-out war and genocidal catastrophe if we are not.

Only one of these hypotheses (at most) will turn out to be true; the rest are not just wrong but wildly wrong. Many people think they know which is true, but nobody does. Isn't that fact, all by itself, enough reason to study religion scientifically? Whether you want religion to flourish or perish, whether you think it should transform itself or stay just as it is, you can hardly deny that whatever happens will be of tremendous significance to the planet. It would be useful to your hopes, whatever they are, to know more about what is likely to happen and why. In this regard, it is worth noting how assiduously those who firmly believe in number 5 scan the world news for evidence of prophecies fulfilled. They sort and evaluate their sources, debating the pros and cons of various interpretations of those prophecies. They think there is a reason to investigate the future of religion, and they don't even think the course of future events lies within human power to determine. The rest of us have all the more reason to investigate the phenomena, since it is quite obvious that complacency and ignorance could lead us to squander our opportunities to steer the phenomena in what we take to be the benign directions.